We’ve come across human bias seeping into AI algorithms from time to time. The latest example is Google Images showing pictures of a chimpanzee using Instagram when one searched for “Black Person Scrolling Instagram.” Just last year, a Google search for “Bitches Near Me” showed the addresses of girls’ schools and hostels.

What further aggravates this situation is Google’s desire to answer every possible question on its own. We all know that one can simply open google.com and get direct answers related to the weather, currency conversion queries, facts related to popular personalities, etc. However, this quest to keep users on Google’s interface and to reduce their dependency on the websites that create content comes with deeper concerns.

On many occasions, advocates of the open web and independent publishers have complained about Google’s attempts to steal publishers’ traffic. For tons of search queries, Google takes data from different content-based websites and displays direct answers without providing any link to the websites. The search giant often curates accurate content with the help of publishers and then displays the results as if all the work were done by Google itself. I’ll explain this in more detail later in the article.

As this becomes the future, an interesting thing happens… the value of creating useful, accurate content for the web diminishes, b/c while publishers do the work, Google alone gets all the benefits. Their trojan horsing of the web is subtle, but damned effective. https://t.co/dydWQSbUju

— Rand Fishkin (@randfish) February 23, 2019

Some answers aren’t that simple

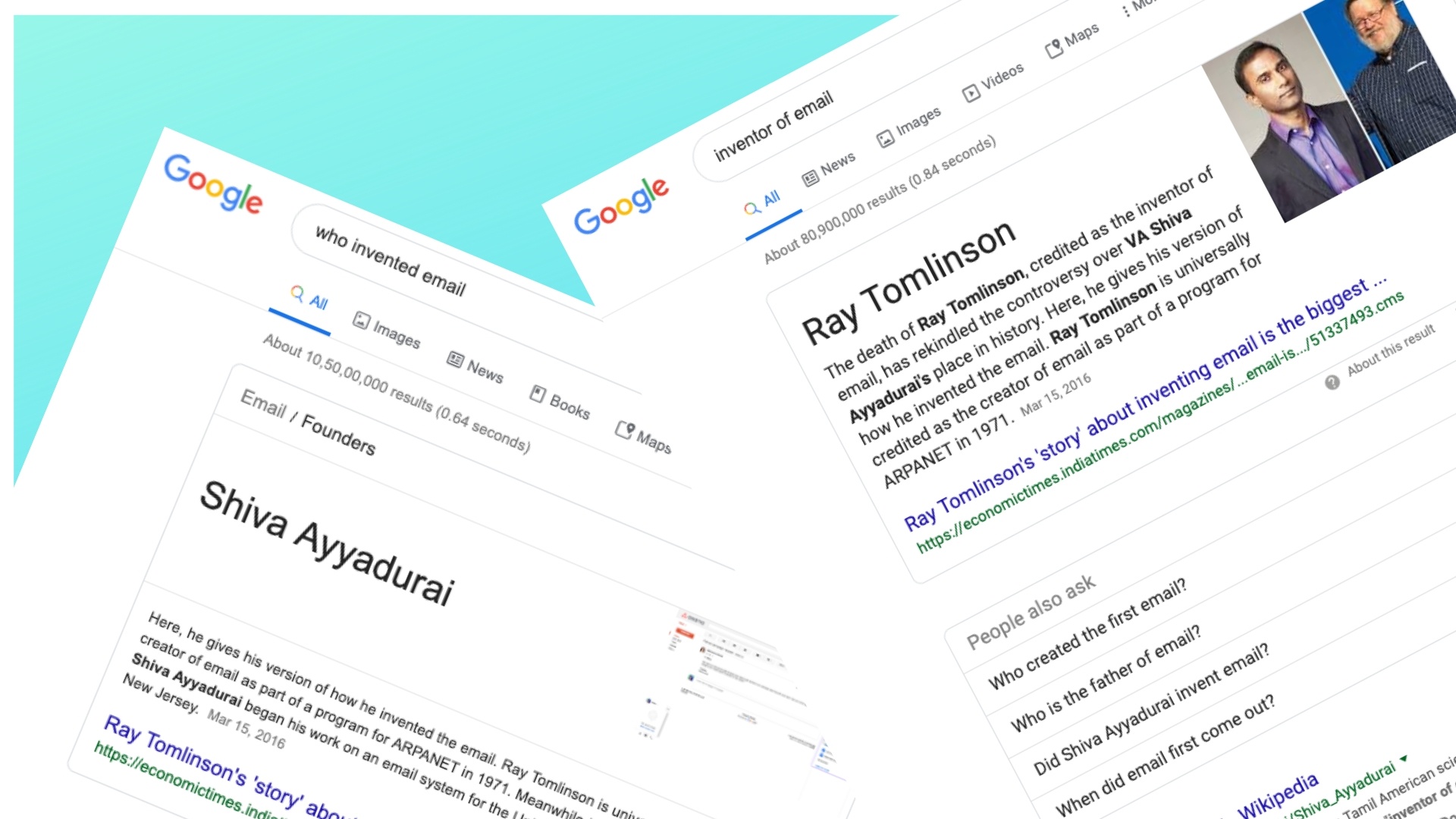

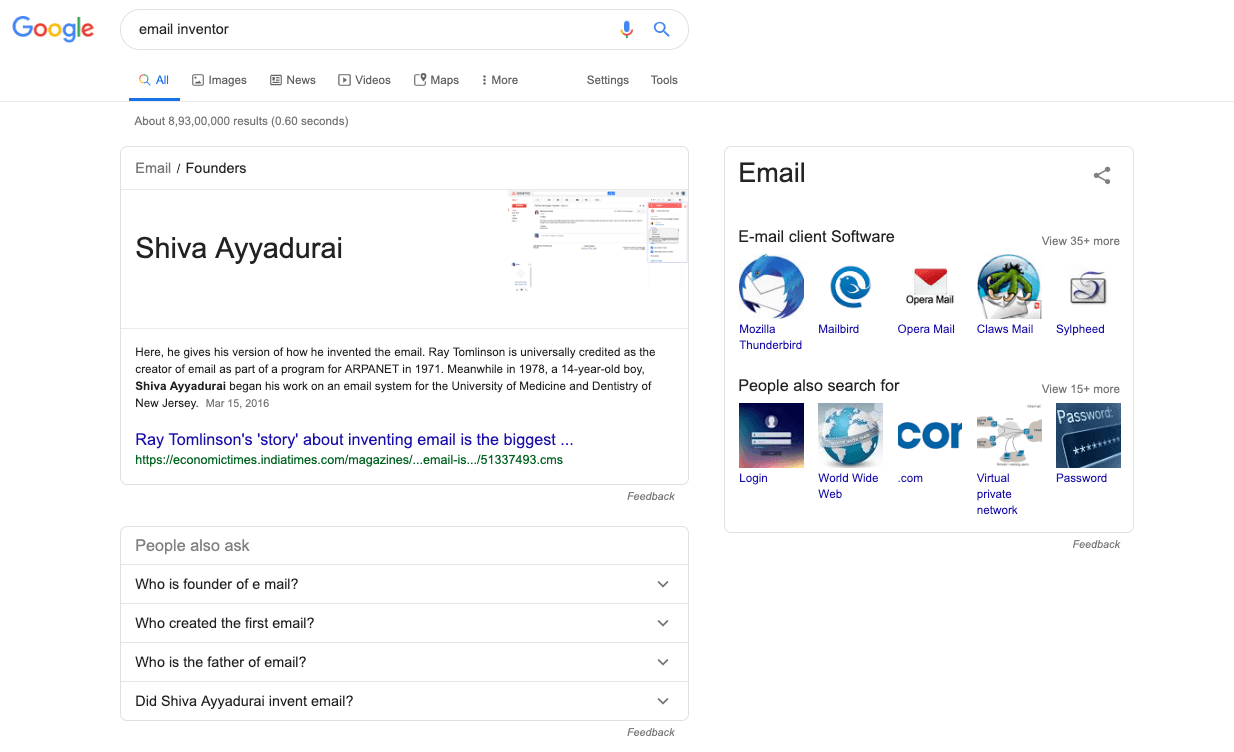

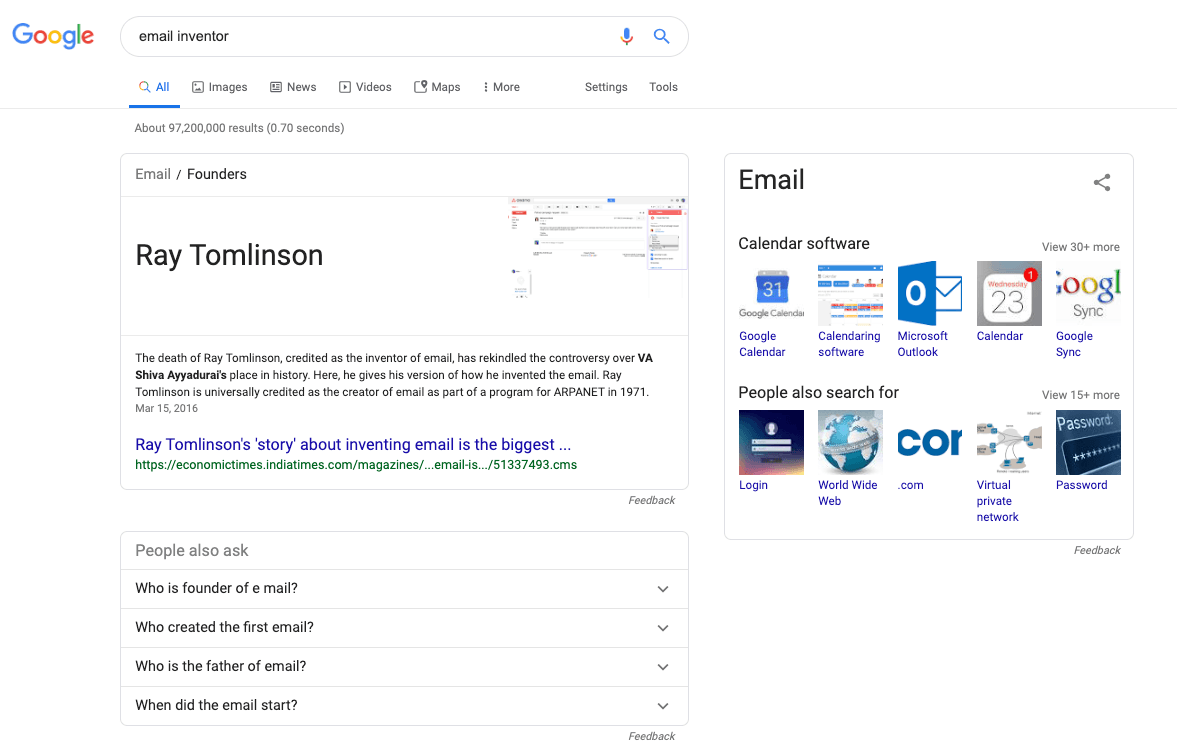

Along similar lines, a particular search query caught my attention earlier this week and inspired this article. If you search for “email inventor” on Google India, you’ll get the following result, which labels Shiva Ayyadurai as the person who created email:

If you run the same search after changing your location to the United States, the answer changes to Ray Tomlinson:

If you are aware of the history of email and the controversy over who invented it, these two names might sound familiar to you. If that’s not the case, you can read this article to understand the topic in detail.

Now, on to the matter of getting different results just by changing my location. I understand that Google takes lots of factors into account and personalizes the results for each searcher, which is why I opened Incognito Mode and used a VPN to get these results. However, even if I hadn’t taken those measures, how could Google show two answers to a fact-based question? It’s similar to getting two different answers to a question like “Who is the Prime Minister of India?” Imagine Google showing Narendra Modi’s name in India and Donald Trump’s name in the US.

Featured Snippets and Fake News

What’s even more interesting is that, in both cases, Google is picking up the answer from the same article published in The Economic Times. This pulling of direct answers from a particular source on the web is what this issue is all about.

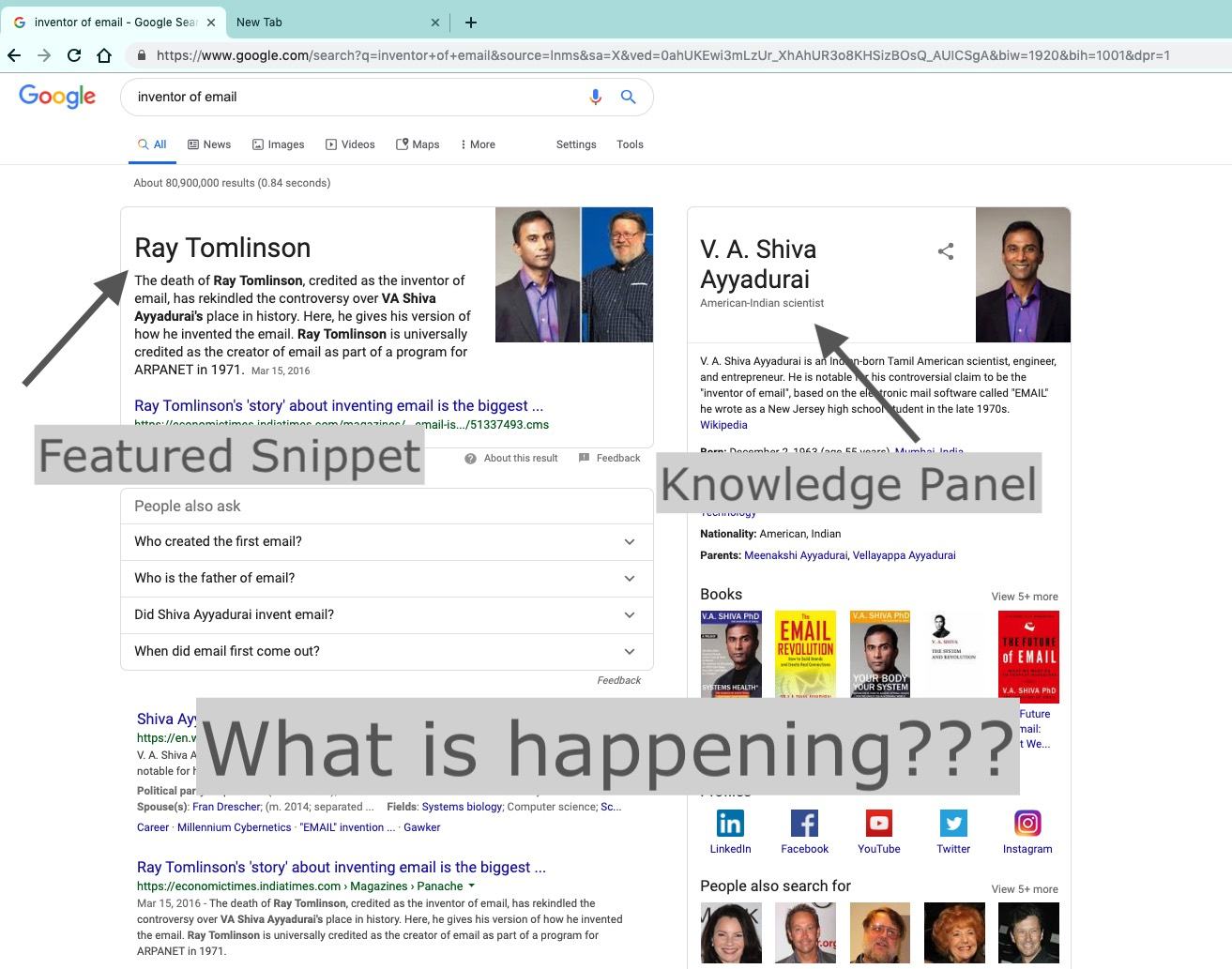

Commonly known as Featured Snippets in the world of search engine optimization (SEO), these cherry-picked answers provide a direct answer right at the top of the search page. These Featured Snippets work just like Google’s own Knowledge Panels, which provide similar information extracted from Google’s index and other reputed sources like Wikipedia. While Google doesn’t attribute websites other than Wikipedia in Knowledge Panels, it does so in Featured Snippets.

Google keeps testing new features and uses its AI-powered algorithms, commonly known as RankBrain, to determine what’s best for a user. However, on many occasions, the search results don’t make much sense. For example, for the search query “inventor of email,” Google shows two different answers on a single page: one in the form of a Knowledge Panel and the other in a Featured Snippet. This example certainly shows the broken state of Google Search. Suppose you’re a school-going kid preparing for a weekly general awareness test. Which answer would you rely on?

According to SEO industry data, Google is continuously expanding Featured Snippets to more and more queries to prove that it’s the best search engine around and that it has all the answers you need. But what about topics that are controversial? Why is Google even attempting to provide a direct answer to a query like “Should India give up Kashmir?”

How can Google try to show a direct answer to a query like “Dog vs cat debate” by pulling the answer from an article titled “20 Ways Cats Are Better Than Dogs”?

Furthermore, if you search the web, there are countless examples of Google Search being fooled into showing outrageous results, and on some occasions, these results can even help propagate fake news. The situation is so dire that any attempt to curb fake news is always appreciated. Thus, we recently tried our best to help our readers identify fake news on social media.

The situation is even worse on Google voice search performed on Google Home devices. There, you don’t have an option to scroll down and look at the articles written by various publications to highlight different opinions.

Google’s brutish effort to create a Featured Snippet for every possible question is futile at best and catastrophic at worst. It is single-handedly disrupting the ad revenue of publishers who dig deep and break important stories, and it is giving us out-of-context answers to politically sensitive questions. I know for a fact that even political experts wouldn’t dare answer a question like ‘To Whom Does Northern Ireland Belong?’ in four lines.

It is frantic behavior like this that prompts lawmakers to make equally controversial laws. The EU copyright directive is a good example of such a law, one that seeks to put the power of publishing and copyright ownership back into the hands of the user.

Google Search and AI

With the increasing push from competitors like Alexa, Cortana, and Siri, Google is expected to take more steps to provide direct answers for more search queries. This brings us to two important questions. Does Google need to assemble a team of human experts who can monitor these direct answers and take the required corrective measures? And where do we need to draw the line when it comes to algorithms and their interference in our daily lives?

We often cite “biased training data” as the primary reason for AI bias, which is true in many cases. For instance, research has found that self-driving cars are more likely to hit dark-skinned people. It’s possible that the machine learning systems used by autonomous driving companies are trained on datasets that don’t have enough pictures of dark-skinned people. If the AI doesn’t know what dark-skinned people look like, how is it going to spot them and prevent an accident?

The case of Google Search is a bit more layered. It’s the result of a combination of information shared by users and Google’s own proprietary algorithms. Google often looks for correlations between different terms on the web and uses its AI to show a result. However, correlation does not always equal causation. Just last year, a Google search for ‘Feku’ led to images of Prime Minister Narendra Modi. This happened because it’s a less-than-flattering name used for Mr. Modi, and Google’s AI, analyzing the information on the web, established a correlation between the two terms and showed the results accordingly. In a similar incident, on typing ‘idiot,’ Google displayed stories related to Donald Trump.
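To make the correlation point concrete, here is a minimal sketch in Python. It is a toy illustration, not Google’s actual algorithm, which is proprietary. The function `top_associations` and the `pages` corpus are made up for this example. The code simply counts which words appear on the same page as a search term and returns the most frequent ones, which is enough to show how a nickname repeated across many pages can end up tied to a person.

```python
from collections import Counter


def top_associations(documents, query, k=3):
    """Return the k words that most often share a document with `query`.

    A hypothetical helper for illustration only: it measures plain
    co-occurrence and knows nothing about truth, intent, or context.
    """
    query = query.lower()
    counts = Counter()
    for doc in documents:
        words = set(doc.lower().split())  # count each word once per page
        if query in words:
            counts.update(words - {query})
    return [word for word, _ in counts.most_common(k)]


# Made-up toy corpus standing in for pages on the web.
pages = [
    "nickname politician speech",
    "nickname politician rally",
    "politician budget",
    "nickname meme politician",
]

print(top_associations(pages, "nickname", k=1))  # ['politician']
```

The counter has no idea whether the association is fair or accurate. It only reflects how often people put the two terms together, which is the gap between correlation and causation described above.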

It’s worth noting that the engineers at Google and other AI experts are working continuously to find a solution to these AI-related problems. Just recently, MIT proposed the term “Machine Behaviour” to describe the myriad of related threats. Efforts like these are commendable, as AI is surely going to play an even bigger role in our society in the near future. Google Search’s erratic behavior on some occasions is just a small piece of the complete puzzle. If we see AI as a companion that will help us augment human potential, we need to outline a set of legal, technical, and ethical questions and look for their answers.