Predator – Anti-Automation System

Predator is a prototype web application designed to demonstrate anti-crawling, anti-automation and bot detection techniques. It can be used as a honeypot, an anti-crawling system or a false-positive testbed for vulnerability scanners.

Warning: I strongly discourage using the demonstrated methods on a production server without knowing exactly what they do. Remember, only the techniques that suit the web application in question should be implemented. Predator is a collection of techniques; its code shouldn’t be used as is.

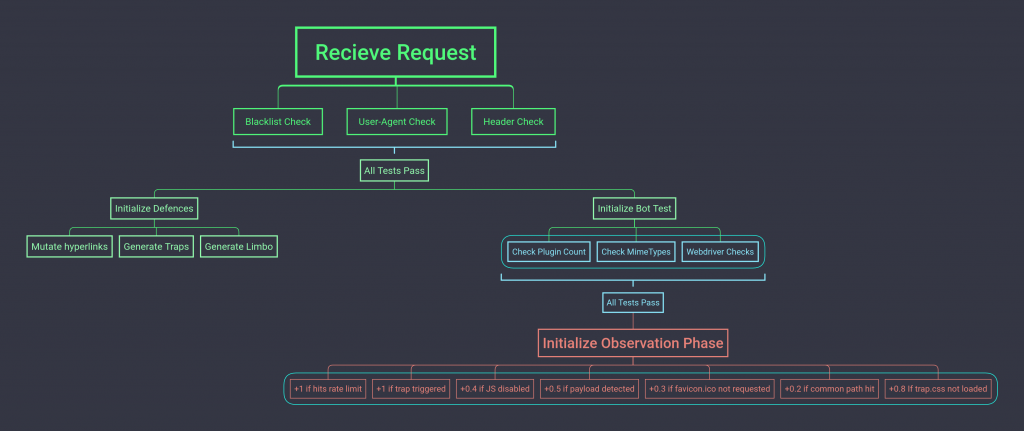

The mind map below is a rough visualization of how the techniques demonstrated here could be implemented in a production environment.

Note: The numbers and factors in “Observation Phase” can be used to assign a reputation score to a client, which can then serve as a strong indicator of malicious activity once a threshold is reached.
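A minimal sketch of such a reputation score in Python, assuming each observed factor adds a fixed weight and a client is treated as malicious once its total reaches a threshold. The factor names, the weights and the threshold of 10 are hypothetical placeholders, not values taken from Predator.

```python
from collections import defaultdict
from typing import Optional

# Hypothetical factor weights; Predator does not prescribe these values.
DEFAULT_WEIGHTS = {
    "suspicious_headers": 3,
    "webdriver_detected": 5,
    "no_css_requested": 2,
    "followed_hidden_link": 10,
}


class ReputationTracker:
    """Accumulates a per-client score from factors seen during observation."""

    def __init__(self, weights: Optional[dict] = None, threshold: int = 10):
        self.weights = dict(DEFAULT_WEIGHTS if weights is None else weights)
        self.threshold = threshold  # hypothetical cut-off
        self.scores = defaultdict(int)

    def observe(self, client_id: str, factor: str) -> int:
        """Add the factor's weight (0 if unknown) and return the new score."""
        self.scores[client_id] += self.weights.get(factor, 0)
        return self.scores[client_id]

    def is_malicious(self, client_id: str) -> bool:
        """True once the client's accumulated score reaches the threshold."""
        return self.scores.get(client_id, 0) >= self.threshold
```

With these placeholder weights, a client that shows suspicious headers (3), a detected webdriver (5) and no CSS requests (2) reaches exactly 10 and is flagged, while any single weak signal alone is not enough.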

Techniques Used

Bot Detection

User-Agent and Header Inspection

HTTP headers sent by bots are often in a different order than those sent by a real browser, or are missing altogether. Many bots identify themselves in the User-Agent header for ethical reasons, while others don’t send one at all.
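The checks above can be sketched as a function that inspects the raw header list of a request. The bot token list and the reference header order (roughly what Firefox sends over HTTP/1.1) are illustrative assumptions; in practice, reference profiles would be captured from the browsers a site actually serves.

```python
# Substrings often present in self-identifying bot User-Agents (illustrative).
BOT_UA_TOKENS = ("bot", "crawler", "spider", "curl", "wget", "python-requests")

# Assumed reference profile: roughly the order Firefox uses over HTTP/1.1.
BROWSER_HEADER_ORDER = ["host", "user-agent", "accept", "accept-language", "accept-encoding"]


def header_red_flags(headers: list[tuple[str, str]]) -> list[str]:
    """Return reasons why a request's headers look automated.

    `headers` must be the raw (name, value) pairs in the order received,
    since the order itself is one of the signals.
    """
    names = [name.lower() for name, _ in headers]
    values = {name.lower(): value for name, value in headers}
    flags = []

    user_agent = values.get("user-agent")
    if user_agent is None:
        flags.append("missing User-Agent")
    elif any(token in user_agent.lower() for token in BOT_UA_TOKENS):
        flags.append("self-identifying bot User-Agent")

    # Browsers send these on page loads; many HTTP libraries omit some of them.
    for name in ("accept", "accept-language", "accept-encoding"):
        if name not in values:
            flags.append(f"missing {name}")

    # Compare the relative order of the profiled headers that are present.
    seen = [name for name in names if name in BROWSER_HEADER_ORDER]
    if seen != sorted(seen, key=BROWSER_HEADER_ORDER.index):
        flags.append("header order differs from browser profile")
    return flags
```

Each flag is a weak signal on its own, so the result fits naturally into a reputation score rather than an immediate ban.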

Webdriver Detection

Most of the HTML mutation techniques described here can be bypassed with browser-based automation frameworks such as Selenium and Puppeteer, but these frameworks can themselves be detected with various tests, as implemented in isBot.js.
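The tests themselves run in the browser, but their results still have to be judged on the server. A minimal server-side sketch, assuming a hypothetical client script that posts navigator.webdriver, navigator.userAgent and navigator.languages back as JSON; these are common heuristics and not necessarily the exact checks in isBot.js.

```python
import json


def webdriver_signals(report_json: str) -> list[str]:
    """Judge a fingerprint report posted back by a client-side script.

    Assumed (hypothetical) report keys: "webdriver" (bool), "userAgent" (str)
    and "languages" (list of str). A missing "webdriver" key is treated as
    suspicious, since a client that never ran the script cannot report it.
    """
    try:
        report = json.loads(report_json)
    except json.JSONDecodeError:
        return ["malformed report"]
    if not isinstance(report, dict):
        return ["malformed report"]

    signals = []
    # navigator.webdriver is true in browsers under automation control.
    if report.get("webdriver", True):
        signals.append("navigator.webdriver is set")
    # Headless Chrome has historically included "HeadlessChrome" in its UA.
    if "HeadlessChrome" in str(report.get("userAgent") or ""):
        signals.append("headless Chrome User-Agent")
    # An empty navigator.languages list has been a common headless giveaway.
    if not report.get("languages"):
        signals.append("empty navigator.languages")
    return signals
```

Since automation frameworks can patch these properties, such checks raise the cost of evasion rather than guarantee detection.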

Resource Usage

Most bots only request webpages and images, while resource files such as .css are often ignored because the HTTP implementation in use doesn’t download them. A client can therefore be flagged as a bot when the ratio of webpages/images to such resource files exceeds a predefined threshold.
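A sketch of the ratio check, assuming requests are classified by file extension and counted per client. The extension sets, the threshold of 10 page/image requests per resource file and the minimum of 20 page requests before judging are hypothetical values to be tuned per site.

```python
from collections import Counter
from pathlib import PurePosixPath

# Assumed classification by file extension; "" covers extension-less routes.
PAGE_SUFFIXES = {"", ".html", ".htm", ".php", ".png", ".jpg", ".jpeg", ".gif"}
RESOURCE_SUFFIXES = {".css", ".js"}


class ResourceRatio:
    """Flags clients that fetch many pages/images but almost no resource files."""

    def __init__(self, threshold: float = 10.0, min_pages: int = 20):
        self.threshold = threshold  # hypothetical pages-per-resource limit
        self.min_pages = min_pages  # don't judge a client on too few requests
        self.counts: dict = {}

    def record(self, client_id: str, path: str) -> None:
        """Classify one requested path (query string ignored) and count it."""
        suffix = PurePosixPath(path.split("?", 1)[0]).suffix.lower()
        counter = self.counts.setdefault(client_id, Counter())
        if suffix in RESOURCE_SUFFIXES:
            counter["resource"] += 1
        elif suffix in PAGE_SUFFIXES:
            counter["page"] += 1

    def is_bot(self, client_id: str) -> bool:
        counter = self.counts.get(client_id, Counter())
        if counter["page"] < self.min_pages:
            return False  # not enough evidence yet
        # +1 avoids division by zero when no resource file was ever fetched.
        return counter["page"] / (counter["resource"] + 1) > self.threshold
```

The minimum page count matters because browser caching means a returning human may legitimately fetch a stylesheet only once across many page views.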

Malformed HTML

Many HTML parsers used in crawlers can’t handle broken HTML the way browsers do. For example, clicking the following link in a browser leads to page_1, but affected parsers take the latter value, i.e. page_2:

<a/href="page_1"/href="page_2">Click</a>

This can be used to keep crawlers out and ban them without affecting the user experience.
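One way to turn this into a trap, sketched under assumptions: each malformed link carries the real target first and a random decoy path second, and any client that requests a decoy is banned. The /trap/ prefix and the in-memory sets are hypothetical; a real deployment would persist and expire them.

```python
import secrets
from html import escape


class MalformedLinkTrap:
    """Emit links that browsers and affected parsers resolve differently.

    Browsers keep the first of two duplicate href attributes, while affected
    crawler parsers take the last one, so only they request the decoy path.
    """

    def __init__(self, decoy_prefix: str = "/trap/"):
        self.decoy_prefix = decoy_prefix  # hypothetical path prefix
        self.decoys: set[str] = set()
        self.banned: set[str] = set()

    def link(self, real_href: str, text: str) -> str:
        """Return a malformed <a> tag whose second href is a fresh decoy."""
        decoy = f"{self.decoy_prefix}{secrets.token_hex(8)}"
        self.decoys.add(decoy)
        return f'<a/href="{escape(real_href)}"/href="{decoy}">{escape(text)}</a>'

    def check_request(self, client_ip: str, path: str) -> bool:
        """Ban the client and return True if it followed a decoy href."""
        if path in self.decoys:
            self.banned.add(client_ip)
            return True
        return False
```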

Invisible Links

Some links are hidden from users with CSS, but automated programs can still see and follow them. Requests to these links can be used to detect bots and trigger a desired action, such as banning the IP address.
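A minimal sketch of a hidden honeypot link and the matching request check. The /hp/ prefix, the link text and the inline display:none style are hypothetical choices; hiding the link through a class in an external stylesheet instead would also defeat bots that skip links with hiding inline styles.

```python
import secrets


class HiddenLinkTrap:
    """Honeypot links that users never see but HTML-reading bots do."""

    def __init__(self):
        self.trap_paths: set[str] = set()
        self.banned: set[str] = set()

    def hidden_link(self) -> str:
        """Return a CSS-hidden <a> tag pointing at a fresh trap path."""
        path = f"/hp/{secrets.token_urlsafe(8)}"  # hypothetical prefix
        self.trap_paths.add(path)
        # display:none already hides it from all users; aria-hidden and
        # tabindex="-1" matter only if it is later hidden by other CSS means.
        return (f'<a href="{path}" style="display:none" '
                f'aria-hidden="true" tabindex="-1">archive</a>')

    def on_request(self, client_ip: str, path: str) -> bool:
        """Ban the client and return True if it requested a trap path."""
        if path in self.trap_paths:
            self.banned.add(client_ip)
            return True
        return False

    def is_banned(self, client_ip: str) -> bool:
        return client_ip in self.banned
```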

Bait Links

When Predator suspects that a visitor is a bot, it generates a random number of random links that point to a page (limbo.php) containing more random links, and this process keeps repeating.
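A sketch of the bait-link generator in Python. limbo.php comes from the text above; the id query parameter, the 5 to 20 link range and the 12-character token format are assumptions made for illustration.

```python
import random
import string
from typing import Optional

TOKEN_ALPHABET = string.ascii_lowercase + string.digits


def bait_links(rng: random.Random, target: str = "limbo.php",
               min_links: int = 5, max_links: int = 20) -> list[str]:
    """Return a random number of links, each pointing back to `target`.

    Every URL carries a random token (hypothetical "id" parameter), so the
    links look distinct to a crawler, yet each one serves more bait links.
    """
    count = rng.randint(min_links, max_links)  # inclusive on both ends
    links = []
    for _ in range(count):
        token = "".join(rng.choices(TOKEN_ALPHABET, k=12))
        links.append(f'<a href="{target}?id={token}">{token}</a>')
    return links


def limbo_page(rng: Optional[random.Random] = None) -> str:
    """Render the page a suspected bot receives: nothing but more bait links."""
    if rng is None:
        rng = random.Random()
    return "<html><body>\n" + "\n".join(bait_links(rng)) + "\n</body></html>"
```

Because every response links only to further limbo pages, a crawler that keeps following links never leaves the loop, while its request log documents the behavior.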

Signature Reversing

Vulnerability scanners usually submit a payload and check whether the web application responds in a particular way. Predator can pretend to be vulnerable by including the expected response, i.e. the signature, in its HTML.

Predator mimics the following vulnerabilities at the moment:

- SQL Injection

- Cross-Site Scripting (XSS)

- Local File Inclusion (LFI)

This method makes it possible to set up a honeypot without hosting any actually vulnerable code, and it also serves as a testbed for checking scanners for false positives.
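A minimal sketch of signature reversing for the SQL injection and LFI cases: when a parameter looks like a probe, the page includes a static string that scanners treat as evidence. The trigger rules are hypothetical simplifications. XSS is left out of this sketch because echoing a payload unescaped, which is what a scanner checks for, would create a genuine XSS flaw.

```python
from html import escape

# Static strings scanners commonly match on (illustrative, not exhaustive).
MYSQL_ERROR = ("You have an error in your SQL syntax; check the manual that "
               "corresponds to your MySQL server version for the right syntax "
               "to use near ")
PASSWD_LINE = "root:x:0:0:root:/root:/bin/bash"


def fake_signatures(params: dict[str, str]) -> str:
    """Return HTML snippets that mimic a vulnerable response to probe-like input.

    Nothing is queried or read from disk: the snippets are fixed strings,
    and user input is HTML-escaped wherever it is echoed.
    """
    snippets = []
    for value in params.values():
        # Hypothetical SQLi trigger: a quote character in any parameter.
        if "'" in value or '"' in value:
            snippets.append(f"<p>{MYSQL_ERROR}'{escape(value)}' at line 1</p>")
        # Hypothetical LFI trigger: directory traversal or a passwd path.
        if "../" in value or "etc/passwd" in value:
            snippets.append(f"<pre>{PASSWD_LINE}</pre>")
    return "\n".join(snippets)
```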

Download

git clone https://github.com/s0md3v/Predator.git

Copyright (C) 2019 s0md3v


The post Predator: Anti-Automation System appeared first on Penetration Testing.