Microsoft's artificial intelligence (AI) research division recently suffered a serious data exposure. A misconfigured Azure storage account led to the disclosure of 38 terabytes of confidential internal Microsoft data, as discovered by the cybersecurity company Wiz.

The exposure began when Microsoft researchers shared open-source AI training data on GitHub. The repository gave users a URL for downloading the data from an Azure storage account. However, the access token embedded in that URL was scoped far too broadly: it granted read-and-write access to the entire storage account rather than only to the data that was meant to be shared.

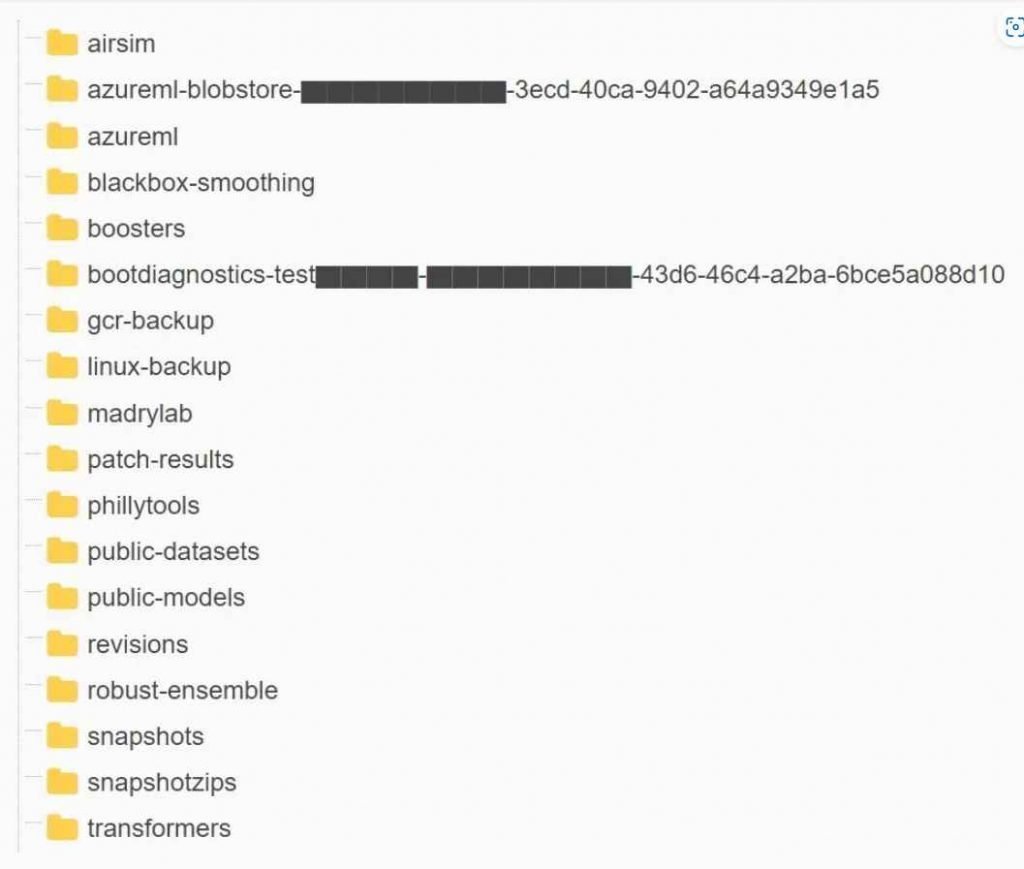

Wiz found that this storage account held 38 terabytes of confidential Microsoft data, including:

- Backups of employees' workstations, which may contain passwords, secret keys, and internal messages sent via Microsoft Teams.
- More than 30,000 private Microsoft Teams messages from 359 Microsoft employees.

The underlying problem was that Azure Shared Access Signature (SAS) tokens were used without properly scoped permissions. SAS tokens allow fine-grained control over access to Azure storage accounts, but when they are configured incorrectly they can grant far more permissions than intended. The Wiz team, consistent with Azure's own documentation, describes an Azure SAS token as a signed URL that grants access to Azure Storage data. The user can set the access level as they see fit: permissions can range from read-only to full control, and the scope can be a single file, a container, or the entire storage account.

The user also has full control over the expiry time, which means they can create tokens that effectively never expire.
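Because the permissions, scope, and expiry are all carried in the token's query string, they can be read back from any shared SAS URL. The Python sketch below decodes the common Blob-storage parameters: `sp` (permissions), `srt` (resource types, present only on account SAS tokens), `sr` (the single resource of a service SAS), and `se` (expiry). It uses only the standard library; the function and class names are illustrative, and it is not a complete parser (it ignores other parameters and does not verify the signature).

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional
from urllib.parse import parse_qs, urlparse

# Letters used in the "sp" (signed permissions) query parameter.
PERMISSIONS = {
    "r": "read", "a": "add", "c": "create", "w": "write",
    "d": "delete", "l": "list", "u": "update", "p": "process",
}
# "srt" (account SAS only): which resource types the token covers.
ACCOUNT_RESOURCE_TYPES = {"s": "service", "c": "container", "o": "object"}
# "sr" (service SAS only): the kind of resource the token is bound to.
SERVICE_RESOURCES = {"b": "blob", "c": "container", "d": "directory"}


@dataclass
class SasSummary:
    kind: str                   # "account" or "service"
    permissions: list[str]      # human-readable permission names
    scope: list[str]            # resource types / resource the token covers
    expiry: Optional[datetime]  # None if "se" is missing or unparseable


def parse_expiry(value: str) -> Optional[datetime]:
    """Parse the ISO 8601 "se" value; Azure uses UTC, often with a trailing Z."""
    if not value:
        return None
    try:
        parsed = datetime.fromisoformat(value.replace("Z", "+00:00"))
    except ValueError:
        return None
    # Date-only values parse as naive datetimes; treat them as UTC.
    return parsed if parsed.tzinfo else parsed.replace(tzinfo=timezone.utc)


def summarize_sas(url: str) -> SasSummary:
    """Decode permissions, scope, and expiry from a SAS URL's query string."""
    query = parse_qs(urlparse(url).query)

    def get(key: str) -> str:
        return query.get(key, [""])[0]

    # Account SAS tokens carry "ss" (services) and "srt" (resource types);
    # service SAS tokens carry "sr" (the specific resource) instead.
    if get("srt"):
        kind = "account"
        scope = [ACCOUNT_RESOURCE_TYPES.get(c, c) for c in get("srt")]
    else:
        kind = "service"
        scope = [SERVICE_RESOURCES.get(get("sr"), get("sr") or "unknown")]

    permissions = [PERMISSIONS.get(c, c) for c in get("sp")]
    return SasSummary(kind, permissions, scope, parse_expiry(get("se")))
```

Applied to a hypothetical account SAS URL such as `https://example.blob.core.windows.net/?sv=2020-08-04&ss=b&srt=sco&sp=rwdlacup&se=2051-10-06T00%3A00%3A00Z&sig=...`, the sketch reports an account-level token that covers service, container, and object resources with read, write, delete, and several other permissions, and an expiry decades away: the kind of configuration described in this incident.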

In this case, instead of granting read-only access, the token granted full control over the contents of the storage account. Its expiry date was also set so far in the future that access was effectively permanent.

The Wiz Research Team added that, because they are difficult to monitor and govern, SAS tokens pose a risk to data security, and their use should be limited as much as possible. Azure offers no central place in its interface to manage these tokens, which makes them very hard to keep track of. Their lifetime can also be set to last practically forever, since there is no upper limit on how long a token remains valid. For these reasons, the team advised against using Account SAS tokens for external sharing.

Given this lack of control, Wiz recommends restricting the use of account-level SAS tokens and using dedicated storage accounts for all external sharing. It also recommends proper monitoring and security reviews of shared data.
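One way to act on the monitoring recommendation is to scan code and documentation for SAS URLs before they are published. The sketch below is a hypothetical pre-publication check, not a Wiz or Microsoft tool: it looks for Blob storage URLs carrying a `sig=` parameter and flags account-level tokens, permissions beyond read and list, and expiry dates further away than a chosen limit. The URL pattern, the function names, and the 7-day limit are all assumptions to adapt to local policy.

```python
from __future__ import annotations

import re
from datetime import datetime, timedelta, timezone
from typing import Optional
from urllib.parse import parse_qs, urlparse

# Hypothetical pattern: Blob endpoint URLs (account names are 3-24 lowercase
# letters or digits). Other storage services are not covered.
BLOB_URL = re.compile(r"https://[a-z0-9]{3,24}\.blob\.core\.windows\.net/[^\s\"'<>)]*")

# Permission letters in "sp" other than read (r) and list (l).
WRITE_LIKE = set("acdpuw")

# Example policy (an assumption): externally shared tokens expire within a week.
MAX_LIFETIME = timedelta(days=7)


def parse_expiry(value: str) -> Optional[datetime]:
    """Parse the ISO 8601 "se" value as a timezone-aware UTC datetime."""
    if not value:
        return None
    try:
        parsed = datetime.fromisoformat(value.replace("Z", "+00:00"))
    except ValueError:
        return None
    return parsed if parsed.tzinfo else parsed.replace(tzinfo=timezone.utc)


def audit_sas_url(url: str, now: datetime) -> list[str]:
    """Return policy findings for one SAS URL; an empty list means it passed."""
    query = parse_qs(urlparse(url).query)

    def get(key: str) -> str:
        return query.get(key, [""])[0]

    findings = []
    if get("srt"):  # only account SAS tokens carry "srt"
        findings.append("account-level SAS token")
    extra = "".join(sorted(set(get("sp")) & WRITE_LIKE))
    if extra:
        findings.append(f"permissions beyond read/list: {extra}")
    expiry = parse_expiry(get("se"))
    if expiry is None:
        findings.append("missing or unparseable expiry")
    elif expiry - now > MAX_LIFETIME:
        findings.append(f"expires more than {MAX_LIFETIME.days} days from now")
    return findings


def scan_text(text: str, now: Optional[datetime] = None) -> dict[str, list[str]]:
    """Map each risky SAS URL found in text to its findings."""
    now = now or datetime.now(timezone.utc)
    results: dict[str, list[str]] = {}
    for match in BLOB_URL.finditer(text):
        url = match.group(0)
        if "sig=" not in urlparse(url).query:
            continue  # not a signed URL
        findings = audit_sas_url(url, now)
        if findings:
            results[url] = findings
    return results
```

Treating any finding as a reason to block publication keeps the check simple. A real deployment would also need to cover other storage endpoints and tokens backed by stored access policies, which may legitimately omit `se` from the URL.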

Microsoft has since revoked the exposed SAS token and carried out an internal investigation into its potential impact. The company has also acknowledged the incident in a recent blog post.

As more of an organization's engineers work with massive amounts of training data, this incident illustrates the new risks businesses face when they begin to adopt AI more broadly. Data scientists and engineers racing to bring new AI solutions into production handle enormous volumes of data, and that data needs additional security checks and safeguards.

Information security specialist, currently working as a risk infrastructure specialist and investigator.

15 years of experience in risk and control processes, security audit support, business continuity design and support, workgroup management, and information security standards.