We live in an era when computing chips keep getting smaller, so the idea of a giant silicon chip sounds like a relic of the past.

As it turns out, not everyone agrees. California-based startup Cerebras Systems has designed a new AI chip that goes the other way.

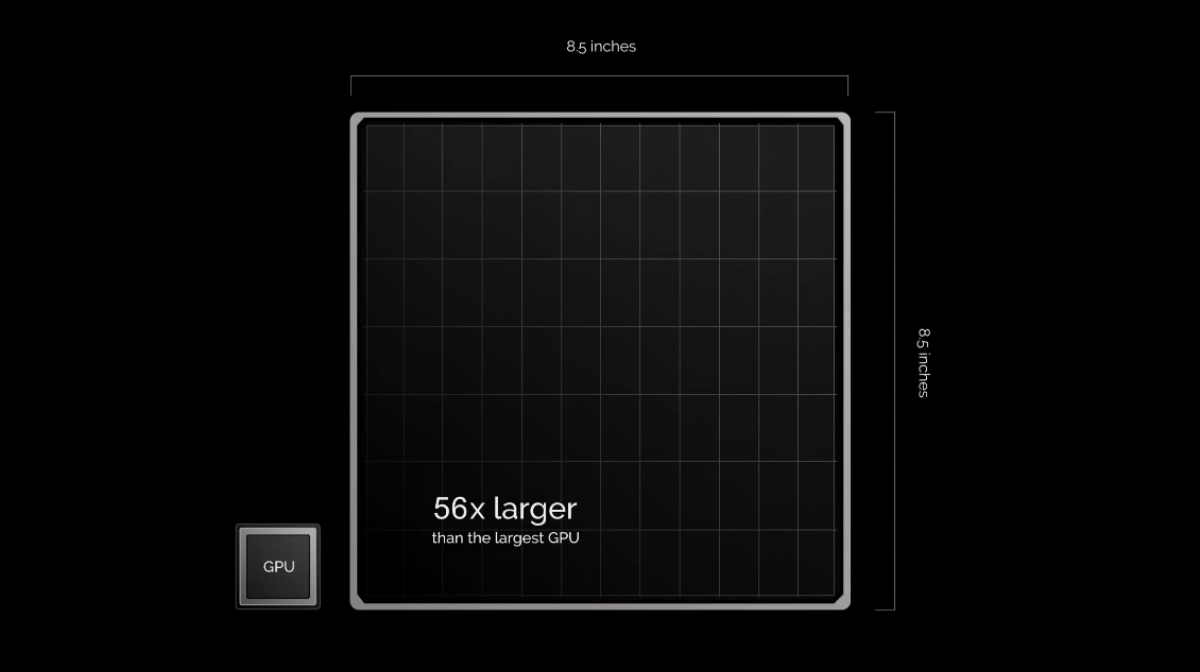

With a surface area of 46,225 sq mm, the Cerebras Wafer-Scale Engine (WSE) takes up a little less space on a table than a 13-inch laptop. For instance, the Dell XPS 13 measures 304 mm wide by 200 mm deep, a footprint of about 60,800 sq mm.

The WSE is about 56 times larger than the largest GPU and uses that extra real estate to offer more computing power, around 3,000 times more on-chip memory, and 10,000 times more memory bandwidth.

Of course, such a massive chip isn’t going to fit in your smartphone. The Cerebras WSE is designed to accelerate deep learning computations and speed up AI research, and it works with popular frameworks such as TensorFlow and PyTorch.

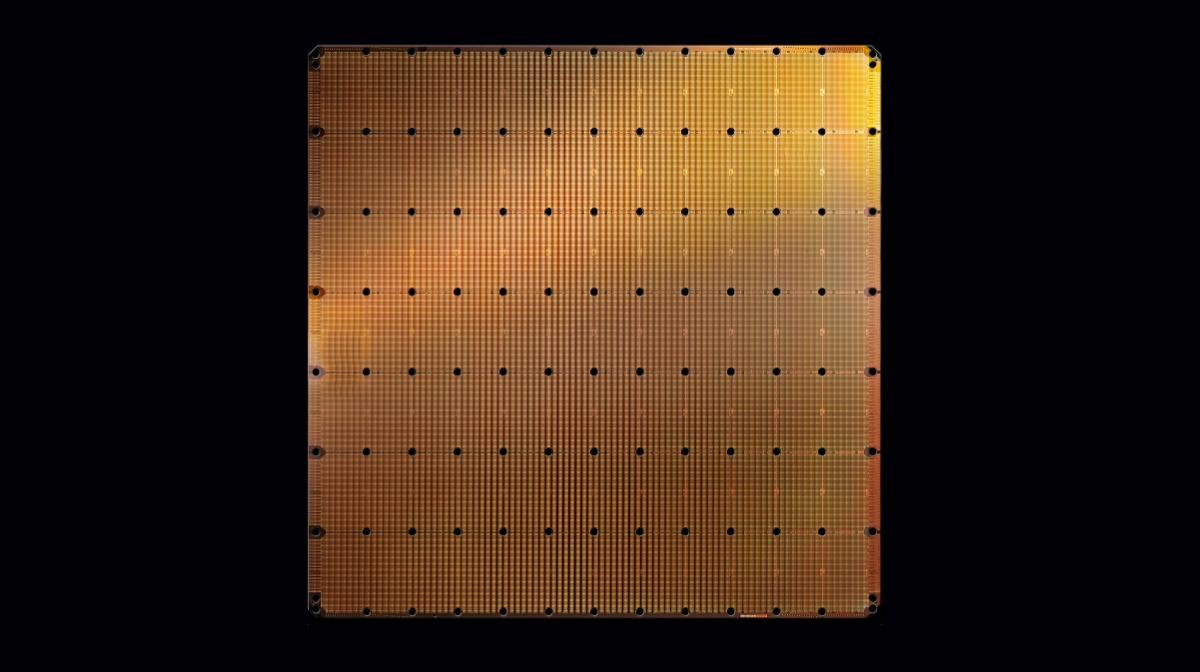

On its 46,225 sq mm of silicon, it packs 1.2 trillion transistors and 400,000 AI-optimized cores. For comparison, the largest GPU currently available (815 sq mm) has 21.1 billion transistors.
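The size comparisons in this article are simple ratios. As an illustrative sketch that uses only the figures quoted here (nothing from Cerebras' own tooling), the snippet below reproduces them. It shows that the WSE is roughly 215 mm on a side, about 56.7 times the area of the largest GPU, and that its transistor count scales by a similar factor of about 56.9.

```python
import math

# Figures quoted in the article (areas in square millimetres).
WSE_AREA_MM2 = 46_225
GPU_AREA_MM2 = 815                           # largest GPU cited in the article
WSE_TRANSISTORS = 1.2e12
GPU_TRANSISTORS = 21.1e9
LAPTOP_WIDTH_MM, LAPTOP_DEPTH_MM = 304, 200  # Dell XPS 13 footprint

def ratio(a: float, b: float) -> float:
    """Return how many times larger a is than b."""
    return a / b

laptop_area = LAPTOP_WIDTH_MM * LAPTOP_DEPTH_MM
wse_side_mm = math.sqrt(WSE_AREA_MM2)        # side length if the die is square
area_ratio = ratio(WSE_AREA_MM2, GPU_AREA_MM2)
transistor_ratio = ratio(WSE_TRANSISTORS, GPU_TRANSISTORS)
laptop_share = ratio(WSE_AREA_MM2, laptop_area)

print(f"Laptop footprint: {laptop_area:,} sq mm")          # 60,800 sq mm
print(f"WSE side length: {wse_side_mm:.0f} mm")            # 215 mm
print(f"WSE vs GPU area: {area_ratio:.1f}x")               # 56.7x
print(f"WSE vs GPU transistors: {transistor_ratio:.1f}x")  # 56.9x
print(f"WSE share of laptop footprint: {laptop_share:.0%}")  # 76%
```

Transistor count grows almost exactly in step with area. That fits the idea that the WSE gets its advantage from using more silicon rather than denser silicon.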

The high-performance AI cores are connected to one another “on silicon by the Swarm fabric in a 2D mesh,” which delivers a jaw-dropping bandwidth of up to 100 petabits per second.
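The article does not describe how Swarm actually routes traffic. The following is therefore only a generic illustration of what a 2D mesh means, not Cerebras' design. In a mesh, each core links directly to its north, south, east, and west neighbors, and data reaches distant cores by hopping from neighbor to neighbor. The grid size and the XY (dimension-order) routing rule are assumptions chosen purely for illustration.

```python
from typing import Iterator

Coord = tuple[int, int]  # (row, column) position of a core in the grid

def neighbors(core: Coord, rows: int, cols: int) -> Iterator[Coord]:
    """Yield the up-to-four cores directly linked to `core` in a 2D mesh."""
    r, c = core
    for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        nr, nc = r + dr, c + dc
        if 0 <= nr < rows and 0 <= nc < cols:  # edge cores have fewer links
            yield (nr, nc)

def hop_count(src: Coord, dst: Coord) -> int:
    """Minimum number of links a message crosses (Manhattan distance)."""
    return abs(src[0] - dst[0]) + abs(src[1] - dst[1])

def xy_route(src: Coord, dst: Coord) -> list[Coord]:
    """Assumed dimension-order route: move along the row first, then the column."""
    r, c = src
    path = [(r, c)]
    step = 1 if dst[1] > c else -1
    while c != dst[1]:          # travel horizontally to the target column
        c += step
        path.append((r, c))
    step = 1 if dst[0] > r else -1
    while r != dst[0]:          # then travel vertically to the target row
        r += step
        path.append((r, c))
    return path

# Hypothetical 4x4 mesh: a corner core has 2 links, an interior core has 4.
print(sorted(neighbors((0, 0), 4, 4)))   # [(0, 1), (1, 0)]
print(xy_route((0, 0), (2, 3)))          # 5 hops from corner to (2, 3)
```

Because every link is short and local, a mesh like this can scale to very large core counts. The trade-off is that traffic between distant cores must cross many hops, which is one reason a high-bandwidth on-chip fabric matters.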

The WSE aims to significantly reduce the time it takes to train AI models and to help AI researchers open up new areas of research.

In a blog post, Cerebras’ director of product management, Andy Hock, says that currently available GPUs fail to meet the “unique, massive, and growing computational requirements” of deep learning, because they were not fundamentally designed for it.

The lack of suitable computing power means it takes longer to test new hypotheses and train new models, which in turn drives up overall costs. He calls for purpose-built chips that can deliver the performance AI workloads demand, finishing them in minutes rather than months.

“We’ve built DSPs for signal processing; switching silicon for packet processing; GPUs for graphics. In the same vein, we need a new processor – a new engine – for deep learning compute,” says Hock.